
Antifragility is a strong criterion: when a system experiences shocks or unexpected variance in its inputs, its performance (by whatever measure of performance you choose) actually improves. Most machine learning models can be made reasonably robust, but I have never seen an example of a “classic” machine learning model that is antifragile. Yes, machine learning benefits from exposure to more data and more diversity (when that variability is related to the underlying process), but not all shocks lead to improved performance in such systems.
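One way to make this criterion concrete is to compare how differently shaped responses react to a symmetric shock around a baseline. The sketch below is only an illustration. It assumes the convexity reading of antifragility (a convex response gains on average from variance, a concave one loses, and a linear one is indifferent), and the function names and the three toy responses are hypothetical, not taken from any particular model.

```python
from typing import Callable


def gain_from_shock(response: Callable[[float], float],
                    baseline: float, shock: float) -> float:
    """Average response under a symmetric +/- shock, minus the unshocked response.

    Positive: the system gains from variance (antifragile in this toy sense).
    Zero: the system is indifferent (robust). Negative: it is harmed (fragile).
    """
    shocked = (response(baseline - shock) + response(baseline + shock)) / 2
    return shocked - response(baseline)


# Hypothetical toy responses, chosen only to show the three regimes.
def convex(x: float) -> float:
    return x * x


def linear(x: float) -> float:
    return 2 * x


def concave(x: float) -> float:
    return -(x * x)


if __name__ == "__main__":
    for name, fn in [("convex", convex), ("linear", linear), ("concave", concave)]:
        print(name, gain_from_shock(fn, baseline=1.0, shock=0.5))
```

Under these assumptions the convex response shows a positive gain, the linear one shows none, and the concave one shows a loss. Note that this only covers shocks that enter through the input the response depends on; a shock that destroys the system itself is outside the sketch.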

For instance, if you are playing chess in order to learn the game, and I come by and knock all the pieces off the board, that is definitely a shock, but it does not improve your ability to learn chess. You would have had a better chance of learning the game if I had left you alone. If, instead of knocking over the pieces, I introduced you to a better opponent, your ability to play chess would improve over time. So a stressor that carries information about the gameplay (a better opponent) improves your ability to play chess, whereas a stressor that carries none (a bully) does not. Deep learning, a subset of machine learning, offers a technique known as adversarial learning, which is a good example of a system that benefits from an adversarial stressor. This kind of system mimics the aspects of real organic systems that exhibit antifragility.

Nature seems to be organized as a harmonious blend of complexity and antifragility

It is the very complexity of engineered systems that often makes them fragile. In comparison, nature has many examples of complex systems that are both robust and antifragile. Taleb argues that humans should copy nature in that regard and engineer systems to be antifragile.

Today, engineers are very experienced in building robust systems, but they have yet to exploit antifragility as a general design principle. One of the critical elements of engineering antifragility into human-made systems is incorporating learning and adaptation into the system. However, this is not a simple thing.

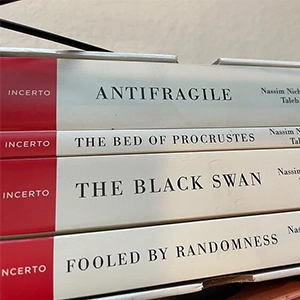

In machine learning, the very fact that we use optimization to arrive at a set of parameters that yields an efficient model means that we pick up fragility along the way. When most people casually use the word antifragile, they seem to mean the loose notion that the model is robust, so that it does not “break” when subjected to pressure or shock. Nassim Taleb has called this out: robustness is not the same as antifragility.

Graph-structured data (ontologies) are inherently antifragile

Practically, we can build models that retain functionality or utility with increasing disorder. How do we do this? By introducing strong structural priors. If we have a strong enough prior, then a model will be able to ignore some of the noise because the noise is inconsequential in comparison to the strength of the prior. This is intrinsically one of the advantages of using ontologies (graph-structured data) as inputs to machine learning models: a structured graph imposes a strong prior, determined wholly by how well the data fit contextually, before those data are fed into a machine learning model. Graph-structured input also has the nice property that we can set a threshold on which connections make it into the graph at the outset. As we modify the threshold, the branches of the graph change dynamically but discretely: the minimum discrete change is a new node or a new edge, and a typical change is the addition of an entire sub-graph.
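The thresholding idea can be sketched in a few lines. This is a minimal illustration, not a description of any production ontology pipeline: the `support` score attached to each candidate edge, the `build_graph` helper, and the example entities are all hypothetical, and a real system would need its own way of scoring contextual fit.

```python
from typing import Dict, List, Set, Tuple

# (source node, target node, contextual support score in [0, 1])
Edge = Tuple[str, str, float]


def build_graph(candidates: List[Edge], threshold: float) -> Dict[str, Set[str]]:
    """Admit only candidate edges whose support score clears the threshold."""
    graph: Dict[str, Set[str]] = {}
    for src, dst, support in candidates:
        if support >= threshold:
            graph.setdefault(src, set()).add(dst)
            graph.setdefault(dst, set())  # make sure the target node exists
    return graph


def count_edges(graph: Dict[str, Set[str]]) -> int:
    return sum(len(targets) for targets in graph.values())


# Hypothetical candidate connections with made-up support scores.
CANDIDATES: List[Edge] = [
    ("customer", "order", 0.95),
    ("order", "product", 0.90),
    ("product", "supplier", 0.60),
    ("supplier", "region", 0.55),
    ("customer", "weather", 0.10),  # weak, probably spurious connection
]

if __name__ == "__main__":
    for threshold in (0.8, 0.5, 0.0):
        g = build_graph(CANDIDATES, threshold)
        print(threshold, len(g), "nodes,", count_edges(g), "edges")
```

Lowering the threshold from 0.8 to 0.5 admits the whole supplier/region branch at once, while the weakly supported weather edge stays out until the threshold drops to nearly zero. The graph changes in discrete steps, and the smallest possible step is a single edge or node.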

If we take historically valid connections and append them to our existing graph, the graph naturally serves as a stronger contextual prior for our machine learning model. By choosing the threshold for accepting new data into the graph, we also implicitly force weak data (data with less contextual support) out of the modeling process. This means that instead of a performance curve that decays as noise increases, we would see a plateau where the noise in the data does not contribute anything to the contextual integrity of the graph. Where new data do add value to the graph, the graph becomes a stronger prior. In other words, we force the model’s performance to level off rather than degrade.

This plateau behavior would lead to useful characteristics in our data model, such as self-regulation: weak data (characterized by high contextual noise) are pruned out of the model input stream, while strong data (characterized by strong contextual relationships) are retained and passed on to the model. When the entropy of the data is high, the model falls back on its best-case prior as the basis of its decision; when the entropy of the data is low, the model sees further gains. This is one of the huge advantages of using ontologies or graph-based data structures to drive the next generation of machine learning models and processes.
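The fall-back-to-the-prior behavior can be illustrated with a toy estimator. This is a sketch under loud assumptions: the prior is treated as a single pseudo-observation, each observation arrives with a hypothetical contextual `support` score, and `gated_estimate` is an illustrative name rather than an existing API.

```python
from typing import List, Tuple

# (observed value, contextual support in [0, 1])
Observation = Tuple[float, float]


def gated_estimate(prior: float, observations: List[Observation],
                   threshold: float) -> float:
    """Blend a prior with only the observations that clear the support threshold.

    The prior counts as one pseudo-observation. If every observation is pruned,
    the estimate is the prior itself, which gives the plateau.
    """
    kept = [value for value, support in observations if support >= threshold]
    return (prior + sum(kept)) / (1 + len(kept))


if __name__ == "__main__":
    mixed = [(12.0, 0.9), (50.0, 0.1)]    # one strong point, one noisy point
    noisy = [(50.0, 0.1), (-30.0, 0.2)]   # only weakly supported points

    print(gated_estimate(10.0, mixed, threshold=0.5))  # noisy point is pruned
    print(gated_estimate(10.0, noisy, threshold=0.5))  # falls back to the prior
    print(gated_estimate(10.0, mixed, threshold=0.0))  # no gating at all
```

With gating, the weakly supported outlier never reaches the estimate, and when nothing clears the threshold the estimate stays at the prior. With the threshold at zero, the same outlier drags the estimate far from both the prior and the well-supported observation.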

So, the use of ontologies and graph-based data structures can substantially enhance model performance and also protect models from bad data to a large extent. This is precisely the characteristic behavior you want to bake into your processes to avoid certain idiosyncratic risks that result from poor model specification.

On a separate point, I think it is important for people to understand that just because we can name/define a phenomenon does not mean we can necessarily engineer it into existence; nor, having engineered it into existence, should we expect that it can continue to function forever without fundamentally altering the environment in which it operates. In the extremes, we can say that no finite resource-limited system will ever produce infinite gains – there must be a tradeoff. I haven’t fully thought through all the implications of antifragility yet, but I’m leaning towards the intuition that real systems that truly exhibit this sort of behavior are unlikely to exist for very long. I could be wrong. I’m sure that engineering, as a discipline, is actively looking to incorporate such concepts into future-proof system architectures that allow those systems to gain from chaos or disorder.

In our own work, we are looking to architect enterprise intelligence using what we term an “intelligence architecture”: essentially, an architecture that lets us append iterative, AI-powered learning loops to existing business processes. These loops make the processes resilient to change, allow them to develop real-time insights, and ultimately lead to antifragile business processes.

Maxwell’s demon

All this talk of antifragility, thermodynamics, and information theory reminds me of a thought experiment proposed by James Clerk Maxwell, known as Maxwell’s demon. I think the conclusion of that thought experiment has much to say about antifragility and its thermodynamic implications in the real world. You start with a closed system containing red and blue gas molecules zipping along in either direction (left or right). In the middle of this closed container is a dividing wall with a small pinhole that allows gas molecules to pass. The hole is guarded by a demon that lets red molecules pass only to the left and blue molecules pass only to the right. Given enough time, this system would gain in order (red left, blue right) until the red and blue gas molecules were perfectly separated.
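The sorting process in this version of the thought experiment is easy to simulate. The sketch below is a toy, not physics: molecules have no positions or velocities, one randomly chosen molecule "arrives at the pinhole" per step, and the names `run_demon` and `sorted_fraction` are hypothetical. It also deliberately ignores whatever the demon has to pay to observe and sort each molecule.

```python
import random
from typing import List, Tuple

# The side on which each color counts as "ordered".
TARGET_SIDE = {"red": "left", "blue": "right"}

Molecule = Tuple[str, str]  # (color, current side)


def sorted_fraction(molecules: List[Molecule]) -> float:
    """Fraction of molecules already on their ordered side."""
    ordered = sum(side == TARGET_SIDE[color] for color, side in molecules)
    return ordered / len(molecules)


def run_demon(n_molecules: int = 20, steps: int = 2000, seed: int = 0) -> List[float]:
    """Return the ordered fraction after each step of the demon's gatekeeping."""
    rng = random.Random(seed)
    molecules = [(rng.choice(["red", "blue"]), rng.choice(["left", "right"]))
                 for _ in range(n_molecules)]
    history = [sorted_fraction(molecules)]
    for _ in range(steps):
        i = rng.randrange(n_molecules)  # this molecule reaches the pinhole
        color, side = molecules[i]
        # The demon opens the hole only for crossings that increase order.
        if side != TARGET_SIDE[color]:
            molecules[i] = (color, TARGET_SIDE[color])
        history.append(sorted_fraction(molecules))
    return history


if __name__ == "__main__":
    history = run_demon()
    print("start:", history[0], "end:", history[-1])
```

In this toy, the ordered fraction can never decrease, because the demon blocks every crossing that would reduce order, and it reaches full separation once every misplaced molecule has visited the pinhole. The gain in order looks free only because the demon's own bookkeeping is left out of the model.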

Mathematics can help us to see things abstractly that we may not yet have the means to see physically, and antifragility is a prime example of a notion that many people are confused about. That’s OK; I am still learning myself.

That said, I believe my intuition is pointed in the right direction.

Is antifragility the perfect solution to every problem? Unclear.

In real systems, especially closed systems, there must be a cost paid for extracting gains from the disorder of the system. So it is very likely that while we extract gains from that entropy, we are doing something that fundamentally destroys or corrupts the system itself, so that it implodes or destabilizes itself out of existence. In other words, the antifragility of one thing may come at the cost of the ultimate fragility of something else; at least, I think this might be true. On the other hand, it may turn out that networked (co-dependent, feedback-driven) systems lend themselves to antifragile behavior because of their very networked nature. That is a useful direction to explore, since antifragility seems to have a strong link to long-term survival.

We know that in social systems, individuals who have a stronger network tend to be more resilient and successful than individuals who remain isolated. We definitely see this in the animal kingdom: animals that work in groups are more successful and have better survival odds than animals that work alone. Humans are undoubtedly the most successful animals of all, owing largely to their ability to work in groups, to use tools, etc. But as we are rapidly seeing, our dominance and success on the planet have costs to the planet itself, which goes back to my earlier point that antifragile processes may come at the expense of the fragility of the system as a whole. This means that antifragility may be fated to result in cyclical system behaviors of creation, growth/prosperity, destruction, and renewal.

In one of his talks, Taleb states, “If you love volatility, then you are alive.” I’d be curious to know how robust this definition of life is because then we have to re-evaluate our category for what constitutes something that is “alive”, and then we have to re-evaluate the rights of living things. That is perhaps a topic for another blog.

Retrospectively, in a very tangible way, my career reflects a certain antifragility. Embracing volatility and uncertainty has always led to the greatest rewards. The life of a data scientist is inherently uncertain – you are never guaranteed an insight at the outset of any problem, and few people truly get this. If you can’t embrace that uncertainty the way a rock climber embraces new and challenging routes, you won’t enjoy this career. Personally, I have always embraced problems that were a little too big for me – and that, in my opinion, has made all the difference.

Prad Upadrashta

Senior Vice President & Chief Data Science Officer (AI)

Prad Upadrashta, as Senior Vice President and Chief Data Science Officer (AI), spearheaded thought leadership and innovation, and rebranded and elevated our AI offerings. Prad's role involved crafting a forward-looking, data-driven enterprise AI roadmap underpinned by advanced data intelligence solutions.
