
“My name is Sherlock Holmes. It is my business to know what other people don’t know.” – Sherlock Holmes


Overview

Many people think that Data Science looks like this:

Which is why you often see job descriptions for data scientists that list every known tool under the sun, as if that mattered. Data Science is not about tools, it is not about modeling, it is about critical thinking, diagnosis, and prescriptive model-informed decision-making.

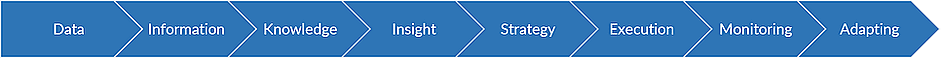

In reality, it looks more like this:

Notice how little these two views resemble each other. The gap between them is the reason your data science practice hasn’t produced unicorn-level ROI. The first 6 components are vital elements of a successful data science practice, while the remaining 2 are required for weaving the insight generation process into the business process. These final two components, strategy and execution, require intimate collaboration between the data science practice and the business. Predictions mean little when no one understands their true import and, as a result, no one acts on their prescient warnings.

I frequently hear people say, “Our models are producing insights.” But wait: models don’t produce insights. They yield predictions in line with your best expectations. That is, a model is your best representation of what you already know, expressed formally, without the ambiguity of natural language. Many people take models to be the absolute truth, when in fact a model is merely a bundle of assumptions, many of which may not hold in a specific scenario. It takes an army of SMEs paired with data scientists to fully understand the implications and appropriate interpretation of model results in a given problem domain before those models can ever be production-ready in a black-box system. However, before we talk about production-ready AI, let’s spend some time diving into the word “insight.” I assure you, it will be insightful.

An insight is not merely an observation. For instance, reporting metrics or KPIs is not insightful in itself. Certainly, KPIs can provide insight, but only if you know how to read them. Don’t get me wrong: KPIs provide transparency and some (potentially illusory) measure of control over a complex system’s behavior. But they often give you the illusion that “you know” what’s going on. Counter-examples are numerous and include some of the most epic failures in recent history.

The first example is the over-reliance of classic risk models on VaR (Value-at-Risk). By assuming Gaussian returns, these models treat tail events as all but non-existent, a blind spot that helped lead to the 2008 financial crisis, when credit markets froze and institution after institution collapsed under the weight of excessive leverage.

The second example is the well-known Macondo disaster of 2010, which led to the largest marine oil spill in the history of the industry. Conflicting sensor data on a deep-sea drilling line led to confusion about the condition of the wellhead and its completion integrity. Meanwhile, certain corporate finance decisions (cost overruns, of course) caused BP and its partners to ignore the underlying problem. The back story is extremely well explained in the movie Deepwater Horizon, so go see it if you have a chance, and call it a free education on the real-world importance of data. Essentially, the corporate decision to prioritize cost over safety and sound engineering judgement, while ignoring subtle anomalies and conflicts in the sensor log data caused by underlying systemic issues, was ultimately catastrophic. It resulted in the loss of eleven lives as well as an epic environmental disaster in which roughly 200 million gallons of oil were released into the ocean, with long-term impacts on the region’s wildlife. The net cost of the disaster to BP was $18.7 billion in fines, on top of the lives lost and the oil spilled, all to save a few million dollars in cost overruns.
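To make the Gaussian-tail point concrete, here is a minimal Python sketch. It assumes returns are scaled to unit volatility and uses, purely as an illustrative stand-in for a fat-tailed market, a Student’s t distribution with 3 degrees of freedom (an assumption, not a calibrated market model). The helper names `gaussian_left_tail` and `student_t3_left_tail` are hypothetical. The sketch compares the probability of a loss at least k standard deviations deep under each model.

```python
import math


def gaussian_left_tail(k: float) -> float:
    """P(X < -k) for a standard normal X, i.e. a loss of at least k sigma."""
    return 0.5 * math.erfc(k / math.sqrt(2.0))


def student_t3_left_tail(k: float) -> float:
    """P(X < -k) for a Student's t(3) variable rescaled to unit variance.

    Illustrative assumption: t(3) stands in for fat-tailed returns.
    A raw t(3) variable T has variance 3, so X = T / sqrt(3) has variance 1
    and P(X < -k) = P(T < -k * sqrt(3)). With u = t / sqrt(3), the t(3) CDF is
    F(t) = 1/2 + (atan(u) + u / (1 + u^2)) / pi, and here u = k.
    """
    u = k
    # By symmetry, P(T < -t) = 1 - F(t).
    return 0.5 - (math.atan(u) + u / (1.0 + u * u)) / math.pi


# Compare how likely a k-sigma loss is under each model.
for k in (2, 3, 4, 5):
    normal_p = gaussian_left_tail(k)
    fat_p = student_t3_left_tail(k)
    print(
        f"{k}-sigma loss: Gaussian {normal_p:.2e}, "
        f"t(3) {fat_p:.2e}, ratio {fat_p / normal_p:.1f}x"
    )
```

At moderate deviations the two models roughly agree (at 2 sigma the t(3) model actually assigns slightly less probability), but by 5 sigma the Gaussian model understates the loss probability by a factor in the thousands. The closed-form t(3) CDF keeps the sketch dependency-free; with SciPy available, `scipy.stats.t.cdf` would be the more general choice.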

So, back to our point: what is an insight? An insight is something that challenges us. It fundamentally alters what we thought was true and gives us a subtle edge in understanding and reacting to a situation. If an insight doesn’t disrupt what you thought was true, it isn’t really an insight; it is an affirmation of consensus (or prior bias). And like all consensus, it is often wrong in exactly the way that leads to major systemic issues, frequently resulting in otherwise preventable tail events (by convention, typically left-tail events, which are indicative of disaster). No one worries about winning the lottery, for instance. People do, however, occasionally worry that, despite taking every precaution, they will be struck down by the hand of nature in a “god-level” event.

As an aside, the risk with bad data scientists is that they become agents of consensus, rather than the watchdogs of good judgement. If they are poor at reading the tea leaves, or worse, lack the courage of their data-driven convictions, they have the potential to become complicit agents of disaster. Luckily, no one pays attention to really bad data scientists anyway.

It is common, in the prevailing hype cycle of marketing buzzwords, for people to lose sight of the forest for the trees and miss the critical distinction between data, information, knowledge, and insight. To me, as a data scientist, these words mean very different things, and each is an integral component of my problem-solving toolkit on the journey from data to insights, and from insights to action. Indeed, any data scientist worth their salt should know what these words mean and understand why they matter to the work they do.

Conclusion

To illustrate the difference between these words, I will use a toy problem I like to call “The Cowboy Problem,” in which the main objective is to avoid being shot by a sadistic firing squad.

I am showing some of my Texas roots here, minus the hat and horse, of course. Y’all ready for a brain teaser?

To read the next part of the blog, click here.

Prad Upadrashta

Senior Vice President & Chief Data Science Officer (AI)

Prad Upadrashta, as Senior Vice President and Chief Data Science Officer (AI), spearheaded thought leadership and innovation, and rebranded and elevated our AI offerings. Prad's role involved crafting a forward-looking, data-driven enterprise AI roadmap underpinned by advanced data intelligence solutions.
