Table of Content

TABLE OF CONTENTS

This blog post is the second part of the Data Warehouse Migration to AR series. The first part of the blog post series Data Warehouse Migration to Amazon Redshift – Part 1 details on how Amazon Redshift can make a significant impact in lowering the cost and operational overheads of a data warehouse.

1. Getting Started with Amazon Redshift (AR)

Since Redshift is delivered and managed in the cloud, it is mandatory to have an Amazon Web Services account. AR handles connections from other applications using ODBC and JDBC. Following are the basic steps to get started with AR:

- Setup an AWS account

- Sign up and get started from the Amazon Redshift detail page or via the AWS Management Console

- Install SQL Client Drivers and Tools

- Configure Firewall Rules

- Create a Redshift Cluster

- Create a database schema based on your requirement in the cluster

- Use an ETL framework to populate the Data warehouse

For more details on how to get started with Amazon Redshift for free check the link,

Getting started with Amazon Redshift

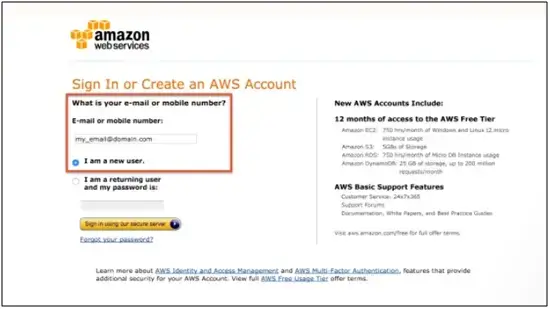

Setting up AWS account

Figure 1: AWS Account Setup

Enter your Payment Information, select a support plan and click Confirm

Click Redshift option on the launch screen to land on the Redshift Dashboard

Setting up your Cluster

The first step to create a data warehouse is to launch a set of nodes, called an Amazon Redshift cluster. Once you plan your cluster, the data set can be uploaded and data analysis queries can be performed. Regardless of the size of the data set, Amazon Redshift offers fast query performance using the same SQL-based tools and business intelligence applications irrespective of the data set size.

Types of nodes to be used play a crucial role while setting up a cluster. There are two types of nodes namely Dense Compute and Dense Storage.

Dense Compute has less storage but it has better performance and speed. When you have more data to be queried, you need a high computing capacity to render the queries. This is the type of instances to use if you need a high-performance data warehouse.

The Dense Storage cluster is designed for big data warehouses. You can create large data warehouses using hard disk drives (HDDs) at a significantly lesser price point.

It is easy to scale your cluster or switch between node types through a single API call or through few clicks in the AWS Console.

Setting up Permissions in Amazon Redshift

Redshift is predominantly used as a data storage warehouse wherein the data will flow in from an external system. Define networking and security settings for your Redshift instance to permission your system to Amazon Redshift in order to send your data.

Ensure that your Redshift Cluster is configured prior to setting up AR permissions. There are two types of Redshift clusters subnets:

- EC2 Classic subnet

- VPC subnet

For more details on how to authorize cluster access, refer Step 4: Authorize access to the cluster

Connecting and setting up Amazon Redshift Integration

In order to send your data you need to connect your Amazon Redshift instance to your source systems. Once it is connected, you can send your transactional, customer, marketing and sales data to any data warehouse like MS SQL Server or PostgreSQL. Add Amazon Redshift as the destination output to send your data.

To make Redshift publicly accessible, you’ll need to whitelist the IPs in your cluster’s network security group. For clusters with a private IP in a VPC, setting up and connecting through a bastion host is required.

2. Pros of using Amazon Redshift

Business Productivity Benefits

- Analyze more data

- Faster time to market

- Get better insights

- Match capacity with Demand

Technical Benefits

- Transition from unsustainable painful operations to efficient cost effective cluster, squeak-free operations, and grab a sizeable reduction of costs. Migration to Redshift from on-premise platform is made possible at a fraction of the cost.

- Single source of truth – Organizations that have high data volumes require a unified and organized Database to handle not only the volume but also act as a source of truth which makes Redshift as the best solution provider.

- Fast – You can run aggregations on hundreds of millions of rows in a few seconds. Very fast query performance due to columnar-storage databases that leverage the Massively Parallel Processing (MPP) capabilities of its data warehouse architecture. Petabyte-scale data warehouse, without any loss in performance and low cost:

- Parallel and Distributed Processing

- Less I/O Storage

- Hardware is optimized for I/O intensive workloads

- Enhanced Networking

- Regular movement of auto fixed enhancements

- Economical

- Easy and Straightforward Pricing

- Leader nodes are not charged

- There are no upfront costs

- Complete governance and control

- Ceaseless/progressive backup of your data to Amazon S3 and across regions

- Streaming restore

- Fault Tolerance

- Amazon Redshift spontaneously recognized and changes any fizzled node in your data warehouse cluster. Hence the substituted note is made available immediately and the recently accessed data is loaded first to continue with querying without any time lag. Since Amazon Redshift reflects the data across the cluster, it utilizes the data from another node to reconstruct the fizzled node.

- Built-in Security

- Data Load is encoded from Amazon S3

- Data in progress is secured by SSL enabled connections

- Amazon VPC allows only connections made from inside the network thus making network isolation possible

- Audit trailing to keep track of the transaction history. You can make use of AWS Cloud Trail to record the account activities

- Vigorous

- Authorizes user-defined functions

- Uses Machine Learning and Data Science

- Service Oriented Architecture (SOA)

- Business Tools such as Tableau is supported

- Supports all the data warehouse core features such as SCD1 and SCD2, and different schemas like the star schema

- Set up a Disaster Recovery (DR) environment with a few clicks in the AR Management Console. Allows you to keep copies of your backups in multiple AWS regions and in the case of a service interruption in one AWS region, you can restore your cluster from the backup in a different AWS region, and gain read/write access to your cluster within a few minutes of initiating the restoring operation

Stay tuned for Part 3 of this 3 part series on Data Warehouse Migration to Amazon Redshift. Please send us a note with your queries and feedback.

Tags

Data-as-an-Asset

Prabhu R Chennupati

Enterprise Consulting Architect

With over two decades of experience spanning enterprise architecture, data and solution architecture, strategic planning, and delivery leadership, Prabhu has significantly guided CDO organizations to develop data architecture strategies and roadmaps for diverse clients.

.jpg)